President Biden’s executive order on AI

On Oct 30, 2023, President Biden signed an executive order (EO) that lays out guidelines and principles for the development and safe use of AI applications[1]. An executive order is not a law, but it does influence future legislation and congressional action. The EO applies to federal agencies and is voluntary for private companies.

The EO covers policy areas such as Safety, Innovation, Civil Rights, and Government. The key directives can be summarized as follows:

- it requires developers of the most powerful AI systems (e.g., models that pose a serious risk to national security or public health) to share safety test results with government agencies.

- the National Institute of Standards and Technology will set the standards for measuring AI safety and risk.

- the Department of Commerce will develop guidance for content authentication and watermarking to label AI-generated content.

- it supports collective bargaining in AI-driven industries, and calls for increased adaptive job training and education to help the workforce adjust.

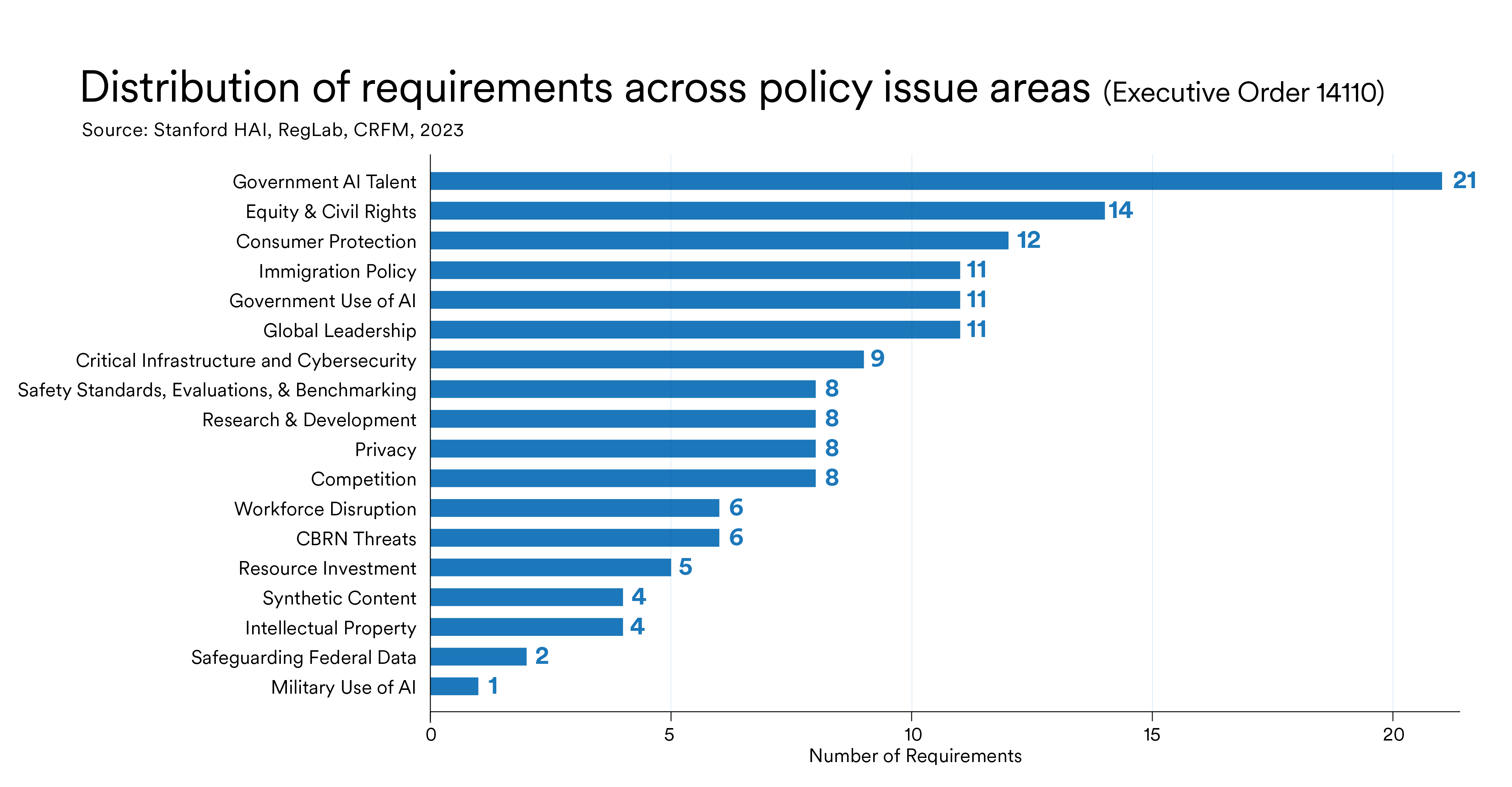

If we look at the distribution of requirements in the EO, it is easy to spot the most urgent policy issues on the minds of the lawmakers, and where AI directives can have the greatest social impact. The bar chart put together by Stanford University's Human-Centered AI institute (see Figure 1) makes it clear that the Biden administration is prioritizing the recruitment of AI talent into agencies (to help regulate the latest state-of-the-art AI systems), mitigating risks to civil rights and consumer protection, and the application of AI to immigration policy[2].

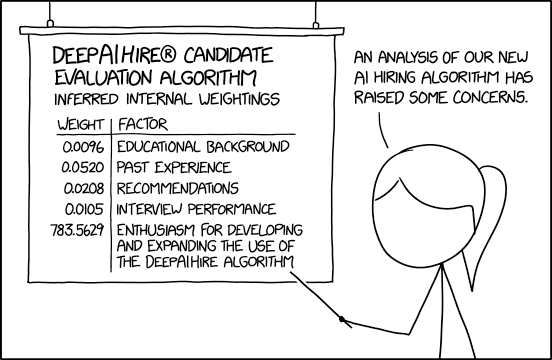

Interestingly, the EO builds on voluntary commitments made by big tech companies earlier this year, leveraging buy-in from the key AI players to set best practices that others should follow. It is worth noting that the tech giants had plenty of time to lobby on and influence AI regulatory measures such as watermarking and red-teaming in the lead-up to the signing of the EO[3], essentially shaping the way they want to be regulated. This is not surprising given the lack of AI domain expertise inside the government.

The EU's AI Act

On December 8th, 2023, the EU reached a landmark agreement on the AI Act, the world's first comprehensive regulation for AI applications (the work started back in 2018)[4]. This sweeping legislation targets what it considers to be 'high-risk' AI applications - zooming in on tools with the biggest potential for harm, like those used in hiring, education, or criminal justice. Developers working on those applications must prove safety, transparency, and fairness, and involve human feedback in their creation. Example requirements include a breakdown of the data used to train the models, and validation showing that the software does not perpetuate biases (e.g., a racially biased surveillance camera). Applications such as the indiscriminate scraping of images to create facial recognition databases would be banned.

Specifically, it defines four levels of risk in AI: unacceptable risk, high risk, limited risk, and minimal or no risk[5]. Examples of unacceptable risk include:

- Biometric categorization systems based on sensitive attributes or characteristics.

- AI used for social scoring or inferring trustworthiness (sorry Black Mirror).

- Any AI system used to predict emotions in high-stakes situations like law enforcement, job performance, and education.

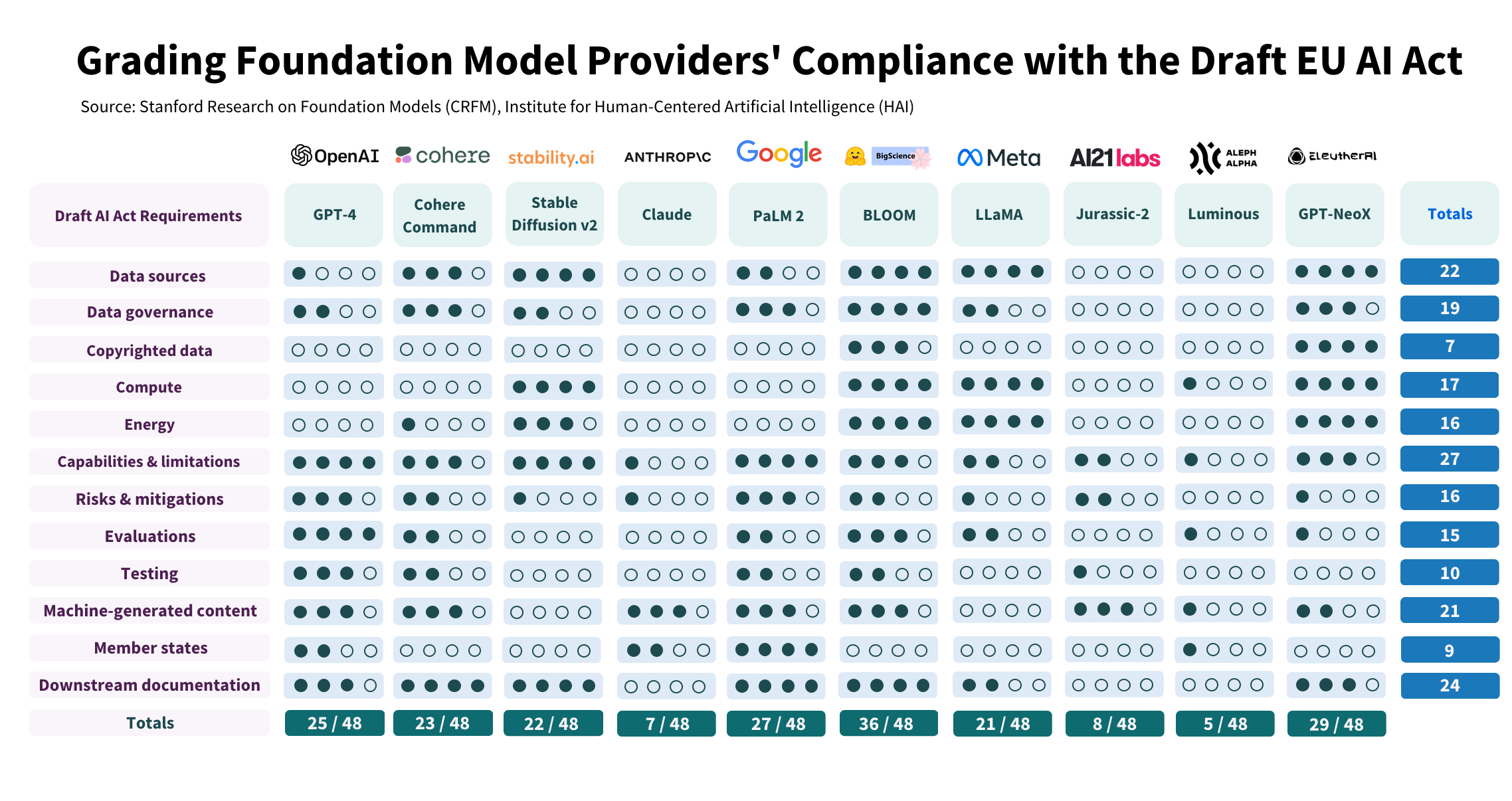
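To make the four-tier structure concrete, here is a minimal sketch of how a team might encode it for an internal compliance checklist. It is an illustration only, not anything defined by the AI Act: the `RiskTier` enum, the `EXAMPLE_USE_CASES` table, and the two helper functions are hypothetical names, and the table lists only the use cases mentioned in this article.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels named in the AI Act, highest first."""
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal or no risk"


# Hypothetical lookup table: the keys are informal labels invented for this
# sketch, and only use cases mentioned in this article are listed.
EXAMPLE_USE_CASES = {
    "biometric categorization on sensitive attributes": RiskTier.UNACCEPTABLE,
    "social scoring": RiskTier.UNACCEPTABLE,
    "emotion prediction in high-stakes settings": RiskTier.UNACCEPTABLE,
    "indiscriminate image scraping for facial recognition": RiskTier.UNACCEPTABLE,
    "hiring": RiskTier.HIGH,
    "education": RiskTier.HIGH,
    "criminal justice": RiskTier.HIGH,
}


def is_banned(use_case: str) -> bool:
    """Return True if the use case is listed above as unacceptable risk.

    Raises KeyError for use cases the table does not cover, rather than
    guessing a tier.
    """
    return EXAMPLE_USE_CASES[use_case] is RiskTier.UNACCEPTABLE


def needs_compliance_evidence(use_case: str) -> bool:
    """Return True if the use case is listed above as high risk, i.e. the
    developer would have to show safety, transparency, and fairness."""
    return EXAMPLE_USE_CASES[use_case] is RiskTier.HIGH


if __name__ == "__main__":
    for name, tier in EXAMPLE_USE_CASES.items():
        print(f"{name}: {tier.value}")
```

The lookup deliberately fails on unknown use cases instead of defaulting to a tier, since assigning a real system to a tier is a legal judgment that a table like this cannot make.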

The EU's AI Act has specific mandates for different categories of models, with foundation models in their own category (as advocated by OpenAI). The latest draft of the AI Act lists 12 obligations for foundation model providers, and so far only one of the major providers, Hugging Face, has achieved a satisfactory score. It is no surprise that Hugging Face takes the lead, since its business is centered on evaluating models and providing transparency on data sources.

Looking ahead

The guidelines and regulations put forth by the U.S. and the EU will push AI developers to fully audit their systems as well as their data sources. A good example of why this matters is the recent Rite Aid scandal, in which the FTC called out the drugstore chain's "reckless use of facial surveillance systems" on its customers for nearly a decade. The system was more likely to accuse Black, Latino, Asian, and women customers of shoplifting, and the many false positives led to the humiliation and harassment of customers.

In the year ahead, we will start to see rapid standardization of how AI products and content are labeled (e.g., Google's SynthID, or C2PA, which uses cryptographic metadata as a tag). More and more companies and contributors will address their 'regulatory debt' and adopt some kind of standard practice for AI governance, making their products or platforms more trustworthy in the eyes of the law and consumers. With the big tech players holding the domain expertise and innovating at what President Biden described as 'warp speed', it is likely that they will continue to influence policymakers in the short term to ensure that innovation (and business revenue) is not hampered.

Some critics argue that the EU AI Act might stifle innovation, while others think the American executive order lacks teeth. The most interesting things to watch will be how local governments navigate the waves of impending regulatory measures, how the EU AI Act affects the development of AI products at multinational tech corporations (e.g., Google, Microsoft), and whether the lack of global harmonization creates unforeseen trade barriers.

REFERENCES

[1] Boak, J., O'Brien, M., Biden wants to move fast on AI safeguards and signs an executive order to address his concerns, https://apnews.com/article/biden-ai-artificial-intelligence-executive-order-cb86162000d894f238f28ac029005059

[2] Meinhardt, C., Lawrence, C., et al., By the Numbers: Tracking The AI Executive Order, https://hai.stanford.edu/news/numbers-tracking-ai-executive-order

[3] Sayki, I., Big Tech lobbying on AI regulation as industry races to harness ChatGPT popularity, https://www.opensecrets.org/news/2023/05/big-tech-lobbying-on-ai-regulation-as-industry-races-to-harness-chatgpt-popularity/

[4] Satariano, A., E.U. Agrees on Landmark Artificial Intelligence Rules, https://www.nytimes.com/2023/12/08/technology/eu-ai-act-regulation.html

[5] Hoffman, M., The EU AI Act: A Primer, https://cset.georgetown.edu/article/the-eu-ai-act-a-primer/

[6] OpenAI’s lobbying efforts: Balancing AI regulation and industry interest, https://dig.watch/updates/openais-lobbying-efforts-balancing-ai-regulation-and-industry-interest